It's the early 80s and you sit at your terminal with a stack of papers, a document holder and a keyboard. Your mission: Enter as many of the paper forms into the terminal as possible. Exciting work, isn't it?

The problem is that this is not an inaccurate way to view data entry today. Granted, a lot of the brute force work has been done and legacy systems exist from which to pull data. Further, the forms are now entered directly into the system as opposed to copied from paper, but as regards entering novel data the situation has changed little.

The other major problem is that this type of raw entry, which is generally entering data into a form, only captures defined phenomena. The data that is being entered, especially into a form, is often classified and defined in advance. There is no elasticity to what can be captured.

This is problematic in that you must have a clear picture of what you are capturing in advance. For hard problems and complex situations you very rarely know much, if anything, in advance. If your only valid form of capturing data is via traditional predefined methods, such as forms, then your ability to capture data, and eventually knowledge, is vastly compromised.

This revelation is nothing new, of course. People have been trying to innovate data entry and knowledge capture for several decades. But, what other types of data can be captured and how?

The Army is asking this exact question, if indirectly. In several SBIR (Small Business Innovation Research) solicitations I have read, the concept of capturing the knowledge inherent in soldiers' heads is coming to the forefront. It is being recognized that not only do experts have valid perspectives and answers, but the boots on the ground do as well (keep in mind, this is probably not a new perspective in the military, but it is one that I have seen in several SBIRs recently). Beyond that, though, they are starting to explore how to bring that knowledge into existing systems.

The how of this is a serious question. Computer systems today are clearly defined and generally built to a single end. Human thought, on the other hand, is often multi-purposed, and the field of understanding human thinking (philosophy) has been around for as long as humans have and has yet to reach one shared conclusion on how we think. Even if we could get some Matrix-like data jack implanted into soldiers' heads, could we really transfer the knowledge as it is represented inside their brains into a computer system?

Perhaps we need another way of gathering data since it would seem that direct access to the human mind would avail us little. There is a new(-ish) movement which is providing an answer: social media.

Probably one of the most useful aspects of social media is how important and interesting knowledge percolates to the top. This is done in various ways. For instance, if I see an interesting tweet on Twitter, I will retweet it. If I read something worthwhile on Facebook, I may comment on it or repost it. It's this interaction with the content that causes the interesting bits to rise to the top.

The exciting thing, at least from a systems perspective, is that this is self-organizing behavior. It is through the interaction of the components of the system (here, the components are the people) that the interesting bits are being obtained. While it may be difficult to capture human thought and knowledge in its native form, it's not as difficult to capture the important pieces as they are being defined by the social system already.

Further, the nature of social media, in that it tends to interact in bite-sized, discrete pieces, means that the computer system need not have much understanding of what it is capturing at all. The knowledge is already distilled into its core component, often with attribution, and the computer system need merely remember it. It can be stored without pre-defined labels and fields.

The thing which the computer system must crucially provide is a robust search capability. Whether this search is run after the fact, or whether a component of the system searches as knowledge comes in, is immaterial. What's important is that the system can search through the knowledge.

Eventually, this captured knowledge can be used and reused as more people interact with it. Each interaction would in essence refine the knowledge, making it more useful to the computer system and the people in general.
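To make the idea a little more concrete, here is a minimal sketch of such a store. Everything in it is an assumption made for illustration: the KnowledgeStore class, its capture, interact and search methods, and the sample snippets are all hypothetical. It shows only the shape of the idea: snippets are remembered as-is with attribution, search is a plain text match, and interaction pushes a snippet toward the top of the results.

```javascript
// Hypothetical store: remembers free-text snippets with attribution and
// no predefined fields beyond a count of how often people interact with them.
class KnowledgeStore {
  constructor() {
    this.items = [];
  }

  // Remember a snippet exactly as it was written.
  capture(text, author) {
    const item = { text, author, interactions: 0 };
    this.items.push(item);
    return item;
  }

  // A retweet, comment or repost all count as one interaction here.
  interact(item) {
    item.interactions += 1;
  }

  // Case-insensitive text search; the most interacted-with snippets come first.
  search(term) {
    const needle = term.toLowerCase();
    return this.items
      .filter((item) => item.text.toLowerCase().includes(needle))
      .sort((a, b) => b.interactions - a.interactions);
  }
}

const store = new KnowledgeStore();
const first = store.capture('Bridge on Route 9 is washed out', 'sgt_a');
const second = store.capture('Alternate bridge two miles north is passable', 'cpl_b');
store.capture('Market is open on Fridays', 'pvt_c');

store.interact(second);
store.interact(second);
store.interact(first);

const results = store.search('bridge');
console.log(results.map((item) => item.author)); // [ 'cpl_b', 'sgt_a' ]
```

A real system would need a far more capable search than a substring match, but the point stands that nothing about the snippet has to be classified in advance.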

Wednesday, December 30, 2009

Tuesday, November 10, 2009

Git vs. SVN (I know, right, another one?!?!)

I've been endeavoring to set up a code repository (or even a document repository, if that need should arise) and have been weighing the merits of both Git and SVN.

At the very heart of the comparisons lies the manner in which Git and SVN operate. SVN is built around a central repository. When you check out a file in SVN, you get only the most recent version. Should you need to do backwards comparisons, you must communicate with the server. You get no local history, either. SVN relies on the availability of the central repository to operate.

Git, on the other hand, is fully distributed. There is generally a "blessed repository" from which everyone will start and ultimately commit to, but when you "clone" that repository you get a full copy of it. Backing up a Git repository with many contributors is actually trivial as there are countless copies of that repository floating around.

Branching

Another major difference revolves around branching. In Git, branching is a way of life (as is the subsequent merging of branches). You want to develop a new feature? Branch on your local box and work on it there, then merge it back into your local main repository before committing back to the blessed repository.

This is not so in SVN. Branching is not done as often (nor as easily). Branching must occur in the central repository and is not a way of life. In this area Git outshines SVN.

Client Tools

One area where Git does not outshine SVN is in the client tools. SVN has been around forever (in digital terms). There are very elegant clients for SVN (such as TortoiseSVN) which allow for an incredible ease of use when working with repositories. Further, most modern IDEs have SVN repository manipulation as a core capability. There are several options for working with SVN in Eclipse, for instance, one of which is core to Eclipse itself.

Git, on the other hand, is young. The tools out there are not nearly as elegant, nor are they as widespread. What's worse, Git is incredibly Linux-centered. There are two Windows clients for Git (with the advent of JGit, that will climb to three), all of which require one to work with the command line. Some GUI projects, such as TortoiseGit, are in the works but will not be ready for prime time for a while. The last issue here is that there is only limited integration with IDEs. With time, these situations will change, but for now it is a major drawback to adoption by those other than the most determined.

Ease of Setup

Since I would like to work with both systems, I decided to set up both on our Windows Server 2003 server. For Git I chose to use Cygwin and OpenSSH, along with Gitosis (a Python tool for hosting Git repositories over SSH). I used Shannon Cornish's tutorial to set things up (along with a little help from scie.nti.st on matters Gitosis). This turned out to be a rather easy and relatively painless way to go about things.

The basic gist is that you install Git when you install Cygwin, then install and set up OpenSSH (by far the most difficult part). At this point you can connect to the server using SSH and clone any repository you would like. Installing Gitosis on top of things (recursively using Git, no less, which is so cool in my book) allows you to use public/private key pairs to authenticate users. You can then use Git to clone the control repository of Gitosis and admin the system remotely. It is a very elegant approach, and one which doesn't require the anticipated user to input a password or have an account created on the server.

Setting up SVN was more difficult. The differences, though, are myriad. While the above Git scheme works over SSH, the method I chose to use for SVN works over HTTP/HTTPS, which has advantages all of its own. I worked off of several tutorials, but the most significant was this tutorial.

The really difficult part here is that you have to rely on Apache. It seems a bit overkill to have to install Apache and get it running in order to serve up your repository, but this is the accepted way of doing things. Once you have it running you must still log in to the server to create a username/password combo for any user that wants to use the system, and you must also log in to the server in order to administer the repository.

The Best of Both Worlds

I have to say that the thing which gets me most excited about Git is the notion of branching it carries with it. I really like the thought of creating a local branch for every new feature. It seems natural to me.

On the other hand, I don't think that I want to saddle everyone else around me with command line tools and vi if they want to work with our repositories. So, can a compromise be made?

In fact, it can! Git has the wonderful ability to clone and commit to SVN repositories. The real details are outlined here by Clinton R. Nixon. In this way, I can take the pain of the command line on myself without foisting it on anyone else, but I also get all of the wonderful features Git brings with it.

Conclusion

In light of all of this, we will be hosting our repositories using SVN. However, I will be keeping an eye towards the maturity of the Git clients. If they should ever advance to the level where any "power user" can attain them, then we very well might switch.

Wednesday, October 7, 2009

A Thought on Environments: Portability

My friend asked me yesterday what I thought of the Kindle. My response was that I was a fan of actually holding a book, feeling the paper, reading in a full fidelity mode. I spoke of how tired my eyes could become from reading on a screen all day. I made a decent case for not adopting the Kindle.

Then, on a whim, I checked out the Kindle app for the iPhone and immediately found myself sucked in.

The first thing that did it was the free availability of a book that we have all been discussing here at the office, Bertrand Russell's "The Problems of Philosophy". Turns out that it is a "classic" and Amazon offers many of the classics for free. I have now downloaded 10 free classics for my iPhone Kindle app and am well on my way to finding book reading Nirvana.

Free content put the hook in my mouth, but what set it was the concept of WhisperSync. WhisperSync is a service that Amazon offers which will sync your content between devices. Now, this is not just the raw content, this is the detailed content, the state content.

For instance, let's say that I am on page 50 of "The Problems of Philosophy" on my iPhone. Further, let us say that I have a Kindle at home on which I do the bulk of my reading. As I read on the iPhone, the Kindle app updates the state for that book. When I get home and fire up my (physical) Kindle my place in the book comes right up (in computer terms, the state is restored). No futzing around with finding my place, everything is just magically the same.

This got me thinking about the synchronicity of environments. In our research the environment you work in is of paramount importance. That environment can be unique to you, or you can share it with others. Everyone can have their own, if necessary. The environment is at least partially reflected in software.

One thing Dr. Sousa-Poza and I talk about a lot is the ability to "save" environments. Environments should be transportable and shareable. If you need to see what I see then you should be able to load up a copy of my environment, see things just as I see them with the data I've been using. What's more, your environment should be able to subsume my environment! Environments should be nestable yet discrete.

WhisperSync brings an interesting possibility to my mind. Shouldn't the environmental changes that I enact on one device translate to another device? What if I access my environment from my iPhone and then switch to my laptop or a web browser? Shouldn't the environment be exactly the same?

This necessitates two things: A place of storage (centralized or decentralized, makes no difference) that all environments have in common and the ability to capture state.

Ideally, the system would operate like this (from the 10,000 foot view):

- I access my environment and make some change to its state.

- That change is transmitted to the "server" which keeps track of environment state. ("server" is in quotations as a means to capture an idea. It need not be an actual server. It might be better to think of it as a state oracle)

- I switch to a different device.

- As I start up my environment on the new device the state is restored from the "server".

- I continue my work.
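The steps above can be sketched in a few lines of code. This is only an illustration under stated assumptions: StateOracle and Device are hypothetical names, the "server" is just an in-memory map, and state is assumed to be plain data that survives a round trip through JSON.

```javascript
// Hypothetical "state oracle": the one place every device agrees to ask
// about the state of an environment. Here it is only an in-memory map.
class StateOracle {
  constructor() {
    this.states = new Map();
  }

  // Keep a copy so later local edits cannot silently alter saved state.
  save(environmentId, state) {
    this.states.set(environmentId, JSON.parse(JSON.stringify(state)));
  }

  // Hand back a copy of the saved state, or null if nothing was saved yet.
  restore(environmentId) {
    const saved = this.states.get(environmentId);
    return saved === undefined ? null : JSON.parse(JSON.stringify(saved));
  }
}

// Hypothetical device: restores state when an environment is opened and
// reports every change back to the oracle.
class Device {
  constructor(name, oracle) {
    this.name = name;
    this.oracle = oracle;
    this.environmentId = null;
    this.state = {};
  }

  open(environmentId) {
    this.environmentId = environmentId;
    this.state = this.oracle.restore(environmentId) || {};
  }

  change(key, value) {
    this.state[key] = value;
    this.oracle.save(this.environmentId, this.state);
  }
}

const oracle = new StateOracle();

const phone = new Device('iPhone', oracle);
phone.open('my-environment');
phone.change('currentPage', 50);

// Switch devices: the new device starts from the state the phone left behind.
const laptop = new Device('laptop', oracle);
laptop.open('my-environment');
console.log(laptop.state.currentPage); // 50
```

Whether the oracle is centralized or decentralized makes no difference to the devices, as long as they all ask the same question of it.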

Monday, August 10, 2009

An Analyst's Development Environment

Here in the land of academic research we're working with a "new" take on mashups. It seems like a no-brainer to me but a lot of people have expressed interest and surprise when I explain to them what we're doing. For now let's call it an analyst's development environment (ADE).

One thing that mashups are really, really good at is taking disparate data sources and allowing "momentary" relationships in the sources to be created. This in effect creates a new data source that is a fusion of the inputs. As is often the case in fusions, this new source tends to be more than just the sum of the parts. You often come up with new views on the data as you add extra sources.

Most people stop here at the fusion stage. Once they have the new view onto the data they rely on other tools outside the scope of a mashup to do interesting things. They might pipe that data into a tool such as Fusion Charts in order to visualize it or they might pipe it into an analysis tool such as a model or sim. But, why do they need to leave the scope of the mashup to do this? What if that analysis or the creation of the Fusion Charts XML was an automated part of the mashup itself?

Mashups deal with web services primarily (though there are some nifty products out there that allow you to mash more than just web services). A web service is usually considered to be a data source. But, in practice they are much more than that. Consider all of the specialized web services provided by Google for geolocation or Amazon for looking up aspects of books. The simplest example I can give you is Google's web service which converts an address to a lat and long pair (called geocoding). With these in mind let's take a different look at web services. Let's look at them as processing units.

A processing unit has 3 criteria: it takes input; does something interesting with that input; and provides output. Processing units are the basis of modern programming. They're known as methods, functions, procedures, etc. depending on context. We can most often build bigger processing units from simpler units.

Web services fit these 3 criteria handily. You can easily provide input, they can easily do something interesting with that input and then just as easily provide output. All communication is done in a standardized protocol driven environment.

The interesting thing about web services is that we can string them together (with the right tools) rather easily into processes. That's exactly what we're doing here. Each web service is either a data source or a processing unit. Given the ability to ferry data from one web service to the next (in an easy way) it is possible to create mashups that do more than just mash data. They actually do some form of processing.
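As a rough sketch of what "stringing together" means, the snippet below treats each web service as an ordinary function. All of it is hypothetical: chain, salesSource, runningTotal and toChartXml are invented names, the data is made up, the XML is an illustrative format rather than any product's real schema, and real web service calls would be asynchronous rather than plain function calls.

```javascript
// Build a bigger processing unit by feeding each unit's output to the next.
function chain(...units) {
  return (input) => units.reduce((data, unit) => unit(data), input);
}

// Hypothetical data source: stands in for a web service that returns rows.
function salesSource(region) {
  const rows = {
    east: [
      { month: 'Jan', total: 10 },
      { month: 'Feb', total: 15 },
    ],
  };
  return rows[region] || [];
}

// Hypothetical processing unit: adds a running total to every row.
function runningTotal(rows) {
  let sum = 0;
  return rows.map((row) => {
    sum += row.total;
    return { ...row, cumulative: sum };
  });
}

// Hypothetical processing unit: renders the rows as simple chart XML.
function toChartXml(rows) {
  const sets = rows
    .map((row) => `<set label="${row.month}" value="${row.cumulative}"/>`)
    .join('');
  return `<chart>${sets}</chart>`;
}

const mashup = chain(salesSource, runningTotal, toChartXml);
console.log(mashup('east'));
// <chart><set label="Jan" value="10"/><set label="Feb" value="25"/></chart>
```

The mashup itself is just another processing unit, so it could in turn be chained into something larger.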

Consider what it would be like if you had a web service endpoint attached to a model. You could pre-mash your data from various sources, then run it all through the model and create a new output that would be very interesting. It would be so easy.

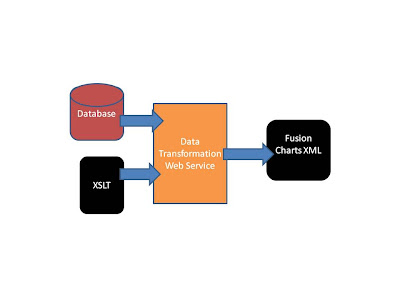

Using Presto we recently put together a demo which worked along these lines. It made our demo come together in several weeks rather than over several months. We used Presto to access databases then ferried that data (in XML format) into a custom built web service that took said data and ran XSL transforms on it. That produced Fusion Charts XML which we then piped into our presentation layer for visualization. It was easy.

Here is a diagram of what the actual flow of the mashup was.

Here is a screen shot of the actual chart produced by the generated Fusion Charts XML.

An ADE would work in a similar way. Using provided tools which allow for ferrying of data from one endpoint to another and given a grab-bag of analysis and transformation web services an analyst could create some amazing things with little effort or technical know-how. The only developer support would be in the creation of any custom web services. It could be a very powerful tool.

Labels: analyst, Fusion Charts, gadget, mash-ups, presto, web services, XSLT

Wednesday, July 22, 2009

The Walled Garden

Let me hereby declare that I love my iPhone. It is useful and wonderful and keeps me connected all the time. I have been using it in lieu of my computer at home for quite some time now. I write emails on it, craft witty 140 character tweets on a regular basis, listen to books on iPod and even play extremely enjoyable games. It is a great experience.

I have, however, begun to chafe under the strictures placed on my iPhone by both AT&T and Apple (often in conjunction with each other).

My gripes against AT&T are especially aggravating. I pay them enough money as it is for the privilege of using my iPhone, why do I need to pay them even more in order to use my iPhone as a modem? It does not seem fair that I will have to shell out an additional $30/month to do what is freely available on other, older and less capable smart phones.

What's worse, if AT&T sees an app as competitive to its business model, it will limit that app, or flat out deny it! Consider Skype: Skype offers free calls over the Internet to other Skype users, yet AT&T will not allow Skype to make calls over its 3G or EDGE networks. They pull the undue competition card.

On the Apple front, a nifty app came to my attention recently that I thought was a truly innovative and awesome use of the iPhone. Given an iPhone 3GS (with its video capabilities, compass and GPS) an "Augmented Reality" app has been developed called TwitARound.

TwitARound looks at the tweets from Twitter in your area and plots them on a map. The AR part, though, comes when you hold your phone up. The app takes your GPS position and your bearing from the compass and lays the tweet on the screen. So, as you move in a circle with your iPhone in front of your face, you can see the actual locations on your iPhone of the tweets as they would appear if the tweets were layered over real life. It's quite awesome and I would like to see more apps like this.
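I have no idea how TwitARound actually does this, but the geometry can be sketched under some assumptions. Below, bearingTo uses the standard initial great-circle bearing formula, and screenX is a hypothetical helper that turns the difference between that bearing and the compass heading into a horizontal screen position. The field of view, the screen width and the coordinates are made-up values.

```javascript
const toRadians = (degrees) => (degrees * Math.PI) / 180;
const toDegrees = (radians) => (radians * 180) / Math.PI;

// Initial great-circle bearing from one point to another,
// in degrees clockwise from north (0 to 360).
function bearingTo(from, to) {
  const phi1 = toRadians(from.lat);
  const phi2 = toRadians(to.lat);
  const deltaLambda = toRadians(to.lng - from.lng);
  const y = Math.sin(deltaLambda) * Math.cos(phi2);
  const x =
    Math.cos(phi1) * Math.sin(phi2) -
    Math.sin(phi1) * Math.cos(phi2) * Math.cos(deltaLambda);
  return (toDegrees(Math.atan2(y, x)) + 360) % 360;
}

// Hypothetical helper: horizontal pixel position of a target on screen,
// or null when the target lies outside the camera's field of view.
function screenX(heading, bearing, fieldOfView, screenWidth) {
  // Signed angle between view centre and target, normalised to [-180, 180).
  const offset = ((bearing - heading + 540) % 360) - 180;
  if (Math.abs(offset) > fieldOfView / 2) {
    return null;
  }
  return (offset / fieldOfView + 0.5) * screenWidth;
}

const me = { lat: 0, lng: 0 };
const tweet = { lat: 0, lng: 1 }; // due east of me

const bearing = bearingTo(me, tweet); // very close to 90 degrees
console.log(screenX(90, bearing, 60, 320)); // facing east: near the centre of a 320px screen
console.log(screenX(270, bearing, 60, 320)); // facing west: null, the tweet is behind me
```

As you turn in a circle the heading changes, the offset changes with it, and the tweet slides across the screen until it drops out of view.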

However, because TwitARound accesses APIs which Apple has not, but should have, made public, it cannot be published in the iTunes store.

Apple plays the non-public API card too much. For instance, they did not make their "find my phone" APIs public so that they could charge you a monthly fee through MobileMe. There are already jailbroken apps which can do this, but since Apple didn't make the APIs public, you won't see legitimate apps show up in the App Store.

Call me naive or non-business-savvy, but all of this seems like bad business to me. As a consumer, I want freedom. It's my device, I should be able to do with it as I choose.

So, while I love my iPhone, I chafe. Yes, I chafe.

Update: (on 7/29/09)

First off, it turns out that Apple will release the video camera APIs with iPhone OS 3.1 (per Ars here). Yay for Apple on this one. It's good to see that some of the "hidden" functionality is being exposed. Now, let's see if they expose the "find my phone" API or if they milk it for more money.

Secondly, the app denial shenanigans continue. In a story here (also on Ars) it appears that all apps relating to Google Voice are being pulled and any apps which feature Google Voice are being denied. The scuttlebutt is that AT&T is pulling the strings here. Some disagree, but my vote goes towards AT&T.

The Dark Side of Twitter

I've seen a very interesting phenomenon going on in the Twitter-verse recently. It has brought to my attention that Twitter (and micro-blogging in general) can be used for reasons that are not above-board. What, pray tell, is this dark and nefarious phenomenon?

I keep getting followed by prostitutes.

The first time it happened I just thought it was some random individual with a sick sense of self. However, the next day, another woman of the same ilk followed me, and the next day another. That's when I started getting curious (not about what the women offered, but about what was really going on).

Invariably, they all posted a provocative picture of a woman with at least one post which was anywhere from lewd to slightly suggestive. That post would have a link attached. The link takes you to some triple-X "dating" service. Within a couple of days the account is shut down (you get the "Nothing to see here, move along" message when you try to visit the account).

No doubt my Twitter user name has, for some reason I am not aware of, been picked up by this "dating" service, and they keep following me with fake accounts, all in the vain hope of promoting their "service". It's all at least partly automated, it has to be, and there's probably one person sitting behind a desk creating profiles and then running those profiles through some custom-made tool to follow a few thousand people.

The practice, though, really brings questions to my mind about what Twitter can't be used for. If it can be used for prostitute marketing, why not black-market marketing or subversive political marketing? Why even marketing at all? I once had the privilege of speaking with an individual who detailed how an anarchist group used Twitter to attempt to disrupt the RNC in Colorado.

Of course, far from being upset by all of this I tend to think of this as rather ingenious. What uses can Twitter serve? What's the most creative use any of you have seen?

Monday, April 27, 2009

JavaScript: Callbacks in Loops

I just finished a mashup that had to be blogged about. I suffered to find this solution, and I wanted to share what I learned with the world.

In the mashup I took a twitter feed and plotted the tweets onto a map based on the location of the tweeter. Let me set the stage.

The Google Map has already been set up and the list of tweets has been obtained. It is now time to plot the tweets onto the map. This will be done within a function called addMarkers. The HTTP Geocoder that Google provides will be doing our geocoding. For more information on this service, see this.

Keep in mind that I'm doing all of this in a Presto Mashlet, and will be calling out to the HTTP Geocoder via a URLProxy call that is undocumented but available for use.

At first blush, the following approach seems appropriate. Here is an excerpt from the addMarkers function:

However, this suffers from a very serious drawback, and that drawback revolves around the scope of the addMarkers function. Remember that you are calling out and receiving an asynchronous response via the callback. There's no telling where the loop will be when a callback returns, but each callback is a closure: it keeps the variables of addMarkers alive, and shares them with every other callback, until all of the callbacks have completed.

When a callback returns, the current value of i will be used to index into tweets! Since all of these calls take time, the most common result is that i will actually be out of bounds of tweets. Recall that updating the loop variable is the last operation done in any JavaScript for loop. Once you have looped through all of your indexes you, of necessity, must set i to be out of bounds of tweets. Therefore, i will be equal to tweets.length.

The result is that you pass an undefined object into placeMarker in place of what should have been the tweet.

The next logical step is that you should create a variable to hold the value of i, like this:

var myTweet = i;

...

this.placeMarker(point, tweets[myTweet]);

However, this will fail as well!

The problem here is that myTweet is still within the scope of our addMarkers function. A var declaration is scoped to the enclosing function, not to the loop body, so addMarkers will have only one copy of myTweet. Once again, the loop will almost certainly finish before any of the callbacks run. The net result this time, however, is slightly different. You will pass a valid tweet to placeMarker, but it will be the last tweet in every instance. You'll have the same tweet attached to all of your markers on the map: the last tweet in the list.
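
To see this second failure in isolation, here is a small sketch. As an assumption for illustration, requestGeocode and deliverResponses are hypothetical stand-ins for the asynchronous geocoder call, and placeMarker only records the tweet it receives; none of these are the mashlet's real functions.

```javascript
// Hypothetical stand-in for the asynchronous geocoder: callbacks are queued
// and only run later, after the loop in addMarkers has finished.
var pendingCallbacks = [];
function requestGeocode(location, callback) {
  pendingCallbacks.push(function () {
    callback({ lat: 0, lng: 0 }); // dummy point
  });
}
function deliverResponses() {
  while (pendingCallbacks.length > 0) {
    pendingCallbacks.shift()();
  }
}

// Simplified placeMarker: records which tweet each marker received.
var placed = [];
function placeMarker(point, tweet) {
  placed.push(tweet);
}

function addMarkers(tweets) {
  for (var i = 0; i < tweets.length; i++) {
    // var is function-scoped: there is one myTweet for all of addMarkers,
    // and every iteration overwrites it.
    var myTweet = i;
    requestGeocode(tweets[i].location, function (point) {
      placeMarker(point, tweets[myTweet]);
    });
  }
}

// Made-up sample data for illustration.
var tweets = [
  { text: "first", location: "Denver, CO" },
  { text: "second", location: "Austin, TX" },
  { text: "third", location: "Boston, MA" }
];
addMarkers(tweets);
deliverResponses();
var texts = placed.map(function (tweet) { return tweet.text; });
console.log(texts.join(", ")); // third, third, third
```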

So, how do you remove the timing issues? This is where I suffered. I hunted and pecked out half-solutions for quite a while. Finally, I had to start thinking outside of the normal box to come up with a solution.

The whole problem revolves around all of the callbacks sharing a single scope, that being the scope of addMarkers. Once you consider it that way, it becomes obvious that each callback needs a scope of its own. The way to do that is to have a separate function fire off the HTTP Geocoder request. Every call to that function creates a fresh scope with its own copies of the arguments, and the callback created inside it holds on to that scope alone. Let addMarkers maintain the loop and call this function whenever it wants to fire off a request. Pass in the tweets and the desired value of i to be remembered.

Consider the following:
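
What follows is a minimal sketch of that idea rather than the mashlet's actual code. The helper name geocodeTweet is an assumption, requestGeocode and deliverResponses are hypothetical stand-ins for the asynchronous geocoder call, and placeMarker just records a string so the pairing of location and tweet is visible.

```javascript
// Hypothetical stand-in for the asynchronous geocoder: callbacks are queued
// and only run later, after the loop in addMarkers has finished.
var pendingCallbacks = [];
function requestGeocode(location, callback) {
  pendingCallbacks.push(function () {
    callback({ query: location }); // echo the query so results can be checked
  });
}
function deliverResponses() {
  while (pendingCallbacks.length > 0) {
    pendingCallbacks.shift()();
  }
}

// Simplified placeMarker: records which tweet ended up at which location.
var placed = [];
function placeMarker(point, tweet) {
  placed.push(point.query + " -> " + tweet.text);
}

// Each call gets its own scope, so index keeps the value it was called with.
function geocodeTweet(tweets, index) {
  requestGeocode(tweets[index].location, function (point) {
    placeMarker(point, tweets[index]);
  });
}

// addMarkers only maintains the loop and hands each index to the helper.
function addMarkers(tweets) {
  for (var i = 0; i < tweets.length; i++) {
    geocodeTweet(tweets, i);
  }
}

// Made-up sample data for illustration.
var tweets = [
  { text: "first", location: "Denver, CO" },
  { text: "second", location: "Austin, TX" },
  { text: "third", location: "Boston, MA" }
];
addMarkers(tweets);
deliverResponses();
console.log(placed.join("; "));
// Denver, CO -> first; Austin, TX -> second; Boston, MA -> third
```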

This approach will result in the correct tweet being displayed with the correct marker on the map.


Labels: google maps, javascript, mashlet, mashups, twitter

Wednesday, April 15, 2009

Como Se Llama?

Originally, I created my twitter account with the handle @jitlife. Obviously, jitlife is my blog, so I thought it made sense. After all, I want people reading my blog, right? Twitter seemed like a good pointer to my blog.

However, I started rethinking this mindset and eventually asked myself this question: Am I marketing my blog, or am I marketing me? By being @jitlife, I was marketing my blog. Therefore, I decided to change my twitter handle.

In deciding on my new twitter handle, I came up with a few criteria:

- It has to be short

- It has to reference me as an individual

It has to be short as twitter only allows 140 characters. If someone is @replying to me and they have to type in a 15 character handle, well, they'll be less inclined to do so (at least from a mobile device) and they'll also have less space to say what they want to say.

That it must reference me is quite obvious once you realize that I'm marketing myself. The problem here is that all of the obvious references to me were taken! @rollins, @mrollins, @mikerollins, etc. All, gone. Most were taken and had only one or two posts, which is frustrating, but so is life.

Barring the obvious, I decided to get clever. I chose @rollinsio. Briefly, it's a silly name I call myself when I'm talking in a fake Spanish accent but it's also clever in that it could stand for Rollins I/O: perfect for twitter! It's short and it references me (rollins is prominent).

Labels: personal branding, professional brand, twitter

Monday, April 13, 2009

More Effective View Management in Web Pages

One of the research scientists I work for and I have been going 'round and around recently about web desktops. In a web desktop you translate the traditional desktop view into a browser. For examples see Ext's web desktop and this actual web desktop OS. The question was how to navigate between views of various applications in an efficient manner.

The good professor drew a distinction between how a traditional web application represents a set of views vs. how a desktop represents a set of views. In the traditional web application a set of views is often represented using tabs. You have a tab for each view of the application. Google has taken this idea to the extreme. Consider Google Docs. In Google Docs when you want to open a new document, you open a new tab. You can keep opening new documents (and consequently new tabs) until you have a bazillion of them, at which point navigation becomes a nightmare.

On the other hand, you have how a traditional desktop represents views: new views are organized on the "start bar" (forgive the Windows-centric frame of reference) with icons. Each icon may have some text and an image to represent it. When you mouse over a given icon you get a tooltip which provides you more information.

The question then becomes how do you find a particular view when you have many views open?

In the web application you may be lucky enough to have individual titles on each tab, but barring that, you have to click on each tab and work your way through potentially all of them before you find what you want.

In a desktop, though, you often can pick out the view that you want simply by glancing at the images in the icons. At the very least, this will narrow your search down. You can then rely on the titles of the icons in question to further narrow the choices. If you're forced to, you can obtain the tooltips for each icon. Your ultimate last step is to look at each view individually. However, looking at each view individually isn't as bad as looking at each tab as you've already excluded some views out of hand because of the images, titles and tooltips. At the very least, you're certainly going to be looking through fewer views.

Quite clearly the desktop way of searching through views is more effective.

There are other factors to be considered as well. The start bar is static across all views. Being part of the default view of the OS, it doesn't go away. You never (or rarely) lose your navigation between views. The same can't be said of web applications.

Further, the start bar only shows the views that are active. If a list of all possible views is desired, you can click on the actual start button to obtain it. A traditional link list shows all possible views, not just the ones that have been accessed during the current session.

Clearly a more effective way of switching views in web applications is needed.

I propose a tool that adheres to the following rules:

- Each view will be represented by an image, a title and a tooltip

- A space for all active views will be set aside on the page

- A list of all possible views can be called for but is not available by default
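
As a rough sketch of how those rules might translate into a data structure, consider the following. Everything here (the ViewSwitcher name, its methods, the sample views) is hypothetical and only meant to show the split between active views and all possible views; rendering the images and tooltips on the page is left out.

```javascript
// Hypothetical registry implementing the three rules; names are illustrative.
function ViewSwitcher() {
  this.views = {};     // every possible view, keyed by id
  this.activeIds = []; // ids of views opened this session, in order opened
}

// Rule 1: each view carries an image, a title and a tooltip.
ViewSwitcher.prototype.register = function (id, title, image, tooltip) {
  this.views[id] = { id: id, title: title, image: image, tooltip: tooltip };
};

// Opening a view makes it active (once) and returns its description.
ViewSwitcher.prototype.open = function (id) {
  if (!this.views[id]) {
    throw new Error("Unknown view: " + id);
  }
  if (this.activeIds.indexOf(id) === -1) {
    this.activeIds.push(id);
  }
  return this.views[id];
};

// Rule 2: the always-visible space on the page shows only active views.
ViewSwitcher.prototype.activeViews = function () {
  var views = this.views;
  return this.activeIds.map(function (id) { return views[id]; });
};

// Rule 3: the full list exists but is only produced when asked for.
ViewSwitcher.prototype.allViews = function () {
  var views = this.views;
  return Object.keys(views).map(function (id) { return views[id]; });
};

// Made-up sample views for illustration.
var switcher = new ViewSwitcher();
switcher.register("mail", "Mail", "mail.png", "Read and send mail");
switcher.register("docs", "Documents", "docs.png", "Edit documents");
switcher.register("map", "Map", "map.png", "Browse the map");
switcher.open("docs");
console.log(switcher.activeViews().length, switcher.allViews().length); // 1 3
```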

Thursday, April 9, 2009

Building Your Professional Brand: Drink the Kool-aid

Is what you have to say compelling, insightful, interesting or useful? Would you like to get this message out to others? Would you like to receive the acknowledgment of your peers for what you have to say?

If you answer yes to all of the above then you need a professional brand. A professional brand is something that marks you as uniquely you, something that points directly at you in such a way that others recognize you. It's not quite a kind of fame, but it is a way of differentiating yourself from the masses.

I've recently become interested in establishing my own professional brand and I've started looking around at ways to do that. Here are a few of the observations I've made.

You need a soapbox

You have to have some place where you can expound on your ideas to the fullest extent possible. Follow out every thought, every nuance of an argument and feel comfortable doing it. Speak your mind!

This is where your blog comes in. It is your soapbox. You can discuss whatever you like there, but the more erudite and insightful your blogs are, the more folks will come back after the first dose.

However, how do you bring people to your soapbox to partake of the Kool-aid you're doling out?

You need a megaphone

You need some forum wherein you can succinctly give out information that will draw others back to your soapbox. You need something that is light-weight and is easily consumable with a minimum of effort.

You need twitter.

140 characters is not (generally) painful to consume. You can read a tweet and in a split second decide if it's something that you're interested in. Thus, if you can craft your tweets to be compelling enough for folks to be interested, then you can use twitter to announce your new blogs.

Of course, this necessitates having a following on twitter, but this is a recursive process. Your first few followers will likely be your friends or those you capture by chance. Consider, though, the phenomenon of the re-tweet. If what you have to say is compelling enough then there is a good chance those that follow you will RT your tweet to those that follow them, and on and on.

Shameless self-promotion is of value here. If you think what you have to say has value, then there's no harm in promoting it. Someone else may find it of value, too. Remember, if it's profound enough for you to blog about, then it's probably profound enough for someone else to read.

But, don't just limit your tweets to self-promotion! Tweet about things that fall in line with your brand or RT information that is compelling in and of its own right. If others begin to see you as a fount of useful information they're likely to buy into your Kool-aid.

Mark Drapeau (@cheeky_geeky) has a great blog about "Expanding Your Twitter Base". His rule of thumb is to provide valuable information to others on a regular basis.

Shepherd your following

Once you have others interested in your Kool-aid, you have to take care of them. Shepherd your following by interacting with them and acknowledging them. You can do this by responding to comments on your blog or by RT'ing interesting things that your followers aim at you. The main point is that you have to be involved in as personal a way as possible. If you're involved personally then others will be more inclined to recommend you to those that they know.

Also, don't just fall off the face of the Earth for any lengthy amount of time. You have to keep the Kool-aid flowing! The more often you present new ideas and information, the more likely folks are to come back and see what the latest is. If you only post a blog once every 2 months, well, you're not going to have an easy time building a following. If, however, you are a prolific poster and always provide value, you're likely to garner a larger following faster.

Wednesday, April 1, 2009

It's a Transforming Process!

So, right now I'm working on a gadget that takes in generic info and sends out Fusion Charts XML. It's a SOAP service and there will be many service endpoints, but right now there are only 2: one for a simple, single-series bar chart, and one for a multi-series "drag node chart" (think network diagram with draggable nodes).

I chose to go about it in a different manner than I've seen a lot of people use for Fusion Charts, though. The prevailing way that I've seen people create Fusion Charts XML is to take the data in on the JavaScript side and create the XML, in string format, in the JavaScript. For this approach, I have only one thing to say: Building XML in JavaScript is less than optimal (translation: it sucks).

So, I decided to go about it in the web service itself. My web service is written in Java and once you get the question into Java a few, more palatable, alternatives suggest themselves. In my web service there are 3 distinct transformations: request object to traditional object; traditional object to simplified XML; simplified XML to Fusion Charts XML.

The request object to traditional object transformation is really the beast. The inputs for the endpoints are comma-delimited strings. A lot of work goes into parsing those strings and putting them into the more traditional object. I have my inputs be comma-delimited strings so that the Presto JUMP requests can invoke them effectively. I could just as easily have one of my endpoints be a direct invocation of the more traditional object, but as I understand it, that's a bit of a bad practice.
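
To give a feel for this first transformation, here is a small sketch. The real service is written in Java; the sketch is in JavaScript only because that is the language used for code elsewhere on this blog. It assumes a single-series endpoint that receives one comma-delimited string of labels and one of values. That parameter layout, and the names parseList and parseSeries, are assumptions for illustration and not the service's actual interface.

```javascript
// Splits a comma-delimited string into trimmed pieces.
function parseList(csv) {
  return csv.split(",").map(function (piece) {
    return piece.trim();
  });
}

// Turns two parallel comma-delimited strings into an array of
// { label, value } objects, rejecting mismatched or non-numeric input.
function parseSeries(labelsCsv, valuesCsv) {
  var labels = parseList(labelsCsv);
  var rawValues = parseList(valuesCsv);
  if (labels.length !== rawValues.length) {
    throw new Error("Expected " + labels.length + " values but got " + rawValues.length);
  }
  return labels.map(function (label, index) {
    var raw = rawValues[index];
    var value = Number(raw);
    // Number("") is 0, so empty pieces have to be rejected explicitly.
    if (raw === "" || isNaN(value)) {
      throw new Error("Value for '" + label + "' is not a number: '" + raw + "'");
    }
    return { label: label, value: value };
  });
}

// Made-up sample input for illustration.
var series = parseSeries("Q1, Q2, Q3", "10, 12.5, 9");
console.log(series[1].label + " = " + series[1].value); // Q2 = 12.5
```

Even in this reduced form, most of the code is validation, which hints at why this step is the beast of the three.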

Once I have my traditional object the easiest step occurs. In this step I use XStream to serialize the traditional object into a simplified XML. If you've never used XStream, it's very simple, very powerful and I recommend it highly.

The last step is where the real magic happens, though. Here is where I transform the simplified XML into Fusion Charts XML. I use the Saxonica XSLT engine to do the transformation and it's a matter of using the right tool for the right job (with regards to using XSLT to transform XML).

XSLT is designed to transform XML, whether it be from XML to XML or XML to some other language. You write a transformation wherein you process the source XML and then create a document in the desired format. It's really not all that hard to take the simplified XML and transform it into the Fusion Charts XML.

Once I have the Fusion Charts XML document I send it back out of the service in a special response that contains the document in string format and the name of the Fusion Chart swf file that will correctly process that document. When my response arrives at its destination all that needs to be done is input the Fusion Charts XML document into the Flash engine with the correct swf file pulled up and voila, it's all done.

I like this approach in that it moves all of the heavy lifting out of the display side (the mashlet, in my case) and into a much more suitable environment, that being a Java web service. I don't have to do endless string concatenation that is hard to debug inside of the JavaScript presentation layer. As a matter of fact, I can write all of the pieces independently of each other and then put them all together in the end. It's a nice break up of all of the work.

An acknowledgment needs to go to @angleofsight for his help in getting this whole process set up. Without his paving of the way I wouldn't be anywhere near as far along as I am right now.


Saturday, March 28, 2009

Gov 2.0 Camp: Virtual Worlds

Virtual Worlds is a topic that I find popping up more and more. I've always taken it with a grain of salt, though, as most of the time I hear it in relation to talk about how it'll make everything better. I shy away from that kind of talk as I know that silver bullets don't exist.

Further, my only real experience with a virtual world stems from the time that I played World of Warcraft. So, in my mind there's extra baggage attached in that I have a hard time seeing how a virtual world could be more than a game. When I entered this session, I decided to try to leave behind my baggage or, at the very least, dispel some of it.

This session was given by members of the Federal Consortium for Virtual Worlds. There were four panelists, but I did not, unfortunately, get their names or contacts.

As I entered this session, I had one question in mind: Are virtual worlds useful for more than just playing?

Surprisingly, there are three government agencies which are using virtual worlds in some capacity: NOAA, NASA and the CDC. All three of these agencies use virtual worlds for information delivery and training. NASA may be the least shocking example here, though, as it makes sense for them to create, say, a virtual world of Mars and then use that virtual world to train rover drivers. It's NOAA that has the most fascinating use of virtual worlds.

NOAA Islands (see towards the bottom of the page under the heading "NOAA Virtual World") is a virtual world that runs in Second Life (a very popular virtual world). In NOAA Islands, one is allowed to create their own mini-planet and then strive to create a stable weather pattern on that planet. As you add one effect, though, its repercussions are seen in other parts of the mini-planet. If you add too much rain, well, you'll flood the crops that are growing in a valley or low-lying area. If you make the world too hot, you'll melt the polar ice caps. In this way, NOAA attempts to convey the intricacies of climate to the uninitiated.

Another example of using virtual worlds in unique and non-playful ways was anecdotally related by one of the panelists. He commented on a school of engineering that he knew of that was using virtual worlds in order to prototype the buildings and structures they were designing. Specifically of use was the ability to determine if handicap access was sufficient for a given building.

However, of all the examples given, the most common was collaboration. The idea set forth was that, in today's world of budget cuts and massive organizational structures that can span a country if not the globe, money could be saved if people collaborated virtually instead of face-to-face.

To this, I asked what advantages a virtual world provides that more traditional forms of telecommunication don't. The response was both intangible and interesting.

Basically, the consensus from the panel was that virtual worlds provide a sort of solidification of knowledge based on their immersiveness. One presenter described it as "informational bandwidth". Virtual worlds, being immersive, allow one to convey large amounts of information faster. Further, this immersiveness adds context to the memories created, making the information conveyed more "solid" or "real". The information has a better chance of sticking due to the immersive nature of a virtual world.

This concept, that the immersiveness of a virtual world added to the quality of the information that was transmitted, seemed to find fertile ground in the audience. One audience member stated that "where it is is what it is", meaning that the memory of something can be tied in no small part to the place where the memory was experienced.

However, someone else asked a very probing question: Do virtual worlds limit or enhance productivity?

The answer to this was less than satisfactory, in my mind: The worlds are getting better with productivity software. Currently, many virtual worlds allow for desktop sharing and persistence of environment. But, does "getting better" mean "good enough"? In my mind, no.

So, how did this session shape my opinion on virtual worlds? Are virtual worlds useful for more than just play?

Yes, they are useful for more than just play.

First and foremost, I was highly impressed by NOAA's forward thinking in this space. So many times allowing people to just get out there and attempt something is the best way to convince them of your point. Allowing people to experiment with climate by actually creating it is ingenious and something that NOAA should be commended for.

Further, I can see how virtual worlds will soon play a huge role in training. Being able to attempt a task in a similar environment to the environment in which you will actually be performing the task is of great value. I can see how this could lead to safer working conditions in hazardous work environments, from military applications to industry.

I can also see that the advantage to prototyping is huge. Being able to put yourself into a user's shoes (as in the case of testing handicap access in a building which is to be built) is of immeasurable value. If you add realistic physics to that, well, you've simply added even more value to the tool.

However, the one place I remain unconvinced is in what is probably the most important space of all (as far as widespread adoption of virtual worlds is concerned). For virtual worlds to be adopted whole-heartedly across government and industry they must facilitate the work that people do. To my mind, hearing that the tools for productivity are still developing means that they are not yet ready for the mainstream.

That's not to say that virtual worlds don't have potential value in this most important space, though. Indeed, I feel that they have great potential. But until I can see an example where they make collaboration as easy, or nearly as easy, as collaboration is in real life, I don't see that they will be widely adopted for this purpose.

With all that said, I'm going to keep my ears out, and my mind open, to this topic. I think that there is great work that can be done here and I look forward to what the researchers of today will do with this technology.

Wednesday, February 11, 2009

How Does the Magician Get What He Wants?

As I started developing the core of the "platform" I realized that I had undertaken a huge task, a task I was not sure I could finish in any short amount of time. This caused me some angst but I figured that I would just have to move on, doing my best to develop my way out of an impossible situation. Not a fun position to be in.

Then, sometime around the end of December, a buddy of mine pointed me in the direction of a product called Presto, developed by the company JackBe. I thought that I had stumbled into my own brain on the web!

Keep in mind that the concept I was working on was that the platform would map gadgets together in such a way that we could dynamically generate them with ease, rapidly prototyping processes to see how they worked. In effect, the gadgets would be web services and the thing the platform would create would be a mashup.

As I said, I had envisioned something similar to PopFly, the ability to visually create mashups and then do something meaningful with them in our efforts to prototype processes. Presto is this and so much more.

First and foremost, Presto has a beautiful visual mashup maker called Wires. In Wires, you drag services from a palette onto your canvas and connect them. You can also drag in actions, blocks that allow you to do something with the results of other services. So, in the basic example I worked with, you have two RSS feeds. You can take the data from each feed and "merge" them (where merge is an action block) so that you effectively have one RSS feed from two. Then, you can add a filter (another action) which can take a dynamic input. In this way, you can create very complex mashups from some very basic building blocks.
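
To make the data flow of that basic example concrete, here is a sketch in plain JavaScript. It is not EMML and does not use any Presto API: the merge and filterByKeyword functions, and the feed items, are hypothetical stand-ins for the two RSS services and the two action blocks.

```javascript
// "Merge" action: two lists of feed items become one.
function merge(feedA, feedB) {
  return feedA.concat(feedB);
}

// "Filter" action with a dynamic input: keep items whose title
// contains the keyword, ignoring case.
function filterByKeyword(items, keyword) {
  var needle = keyword.toLowerCase();
  return items.filter(function (item) {
    return item.title.toLowerCase().indexOf(needle) !== -1;
  });
}

// Made-up feed items for illustration.
var feedA = [
  { title: "Mashups in government" },
  { title: "Weather update" }
];
var feedB = [
  { title: "Building a mashup with RSS" }
];

// Wiring the blocks together: two feeds in, one filtered feed out.
var result = filterByKeyword(merge(feedA, feedB), "mashup");
console.log(result.length); // 2
```

Each block takes a list of items and returns a list of items, which is what lets them be chained in any order.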

If the complexity of Wires is not enough for you, however, they have a markup language for creating mashups called EMML. What you do in Wires is distilled down to EMML, but Wires doesn't offer the full capabilities of EMML (which is not to say that Wires is not fully featured, there are just some rather tricky things you can do with EMML that you can't do with Wires as they don't have a visual representation).

What's even cooler, and something that spoke to a need we had, is that each mashup is published as a service itself. So, you can include mashups in other mashups! The modularity is great.

But, you might be asking, how do you get the services in so that you can use them from the palette?

Well, Presto has a "Service Explorer" which allows you to import services from wherever they may be and "publish" them in the Presto server. However, there's a little more to it than that. Presto comes complete with a user authentication system that can be standalone or hook into LDAP or AD. When I import a service, I can assign rights to it, and only those in the appropriate groups can even see the service, or any mashup in which the service exists. You can also assign rights to mashups themselves.

So, that gave us the mashing capability that we've been looking for, but the goodies in this bag didn't end there!

What we have up to this point is a unique view of the data, but no visualization of the data. Enter the mashlet!

A mashlet is a view of the mashup in a portable and embeddable package. It is created in JavaScript. Once you've created a mashup, you can attach a mashlet to it so that others can see what the mashup provides. There are 5 prebuilt mashlet types: RSS; grid; chart; Yahoo Map; and XML. If your data fits into any of these predefined views, creating a mashlet is as simple as selecting the mashup or service, selecting the view, then publishing it. If you need a more complex view of your data you can create a mashlet by hand. The process for creating a mashlet by hand is rather well thought out and not all that hard to grasp.

Once a mashlet is published you can do one of several things with it.

The mashlets are served up from the Presto server in much the same way that any JavaScript object is. Right out of the gate, you can view a mashlet standalone, if you so choose. However, the real fun comes when you realize that you can embed mashlets into any HTML page you wish by simply including a script tag. You can also embed them in a MediaWiki, NetVibes or as a GoogleGadget. You can't ask for more flexibility. From what I understand, there are more embeddable objects coming, including things such as JSR-168 portlets.

So, from our perspective, Presto offers us 3 incredible capabilities: the ability to capture services from across the web; the ability to create mashups in an easy and visual manner; and the capability to add a face to the services and mashups we create, then embed that face wherever we need.

We've committed to Presto as the core of our platform and it puts us months, if not years, ahead of where we were.